Emdoor & IDEA Research Institute Unveil UniTTS: A Breakthrough End-to-End AI Voice Model to Revolutionize On-Device Human-Computer Interaction

Originally by: Emdoor Research Institute | July 03, 2025

In the modern digital landscape, the interface between humans and machines is increasingly defined by voice. From smartphone assistants to smart home controls, voice interaction technology is rapidly reshaping our daily lives. However, a persistent challenge remains: achieving truly natural, fluid, and emotionally resonant communication with our devices. The robotic, monotone nature of many existing systems highlights a critical gap.

Traditional voice interaction systems often struggle to fully capture and utilize the rich, non-verbal information embedded in human speech. These "paralinguistic features"—such as timbre, prosody, and emotion—are essential for natural communication but are frequently lost in translation by machines. This results in synthesized speech that lacks the authenticity and expressiveness we expect. As artificial intelligence advances, user expectations have evolved; we no longer want a machine that simply understands commands, but one that can communicate with personality and emotional nuance.

To shatter these limitations and usher in a new era of intelligent on-device voice interaction, the Emdoor Research Institute, in a landmark collaboration with COTLab, a joint laboratory of the Guangdong-Hong Kong-Macao Greater Bay Area Digital Economy Research Institute (IDEA), has developed UniTTS, a family of powerful, end-to-end large speech models.

The Core Challenge: Beyond Words to Holistic Audio Understanding

One of the dominant approaches in modern Text-to-Speech (TTS) modeling relies on Large Language Models (LLMs) processing discrete audio codes. The effectiveness of this method hinges entirely on the quality of the audio's discrete encoding scheme. Many researchers attempt to separate acoustic features from semantic (content) features. However, this decoupling is fundamentally flawed. Not all speech information can be neatly categorized. For example, powerful emotional expressions like laughter, crying, or sarcasm are holistic audio events where acoustics and semantics are intrinsically linked. Furthermore, high-quality "universal audio" data, which includes rich background sounds or sound effects, defies simple separation.

While some approaches adopt multi-codebook schemes, such as GRFVQ-based methods, to improve reconstruction quality, these schemes emit several tokens for every audio frame and therefore dramatically increase the bitrate and length of the discretized audio sequence. Such long sequences make it significantly harder for an LLM to model dependencies within the audio, which is why a low bitrate is a critical metric for on-device performance.

To address this, our work introduces DistilCodec and UniTTS. DistilCodec is a novel single-codebook audio codec trained to achieve nearly 100% uniform codebook utilization. Using DistilCodec's discrete audio representations, we trained the UniTTS model on the Qwen2.5-7B backbone.

Our key contributions are:

A Novel Distillation Method for Audio Encoding: We distill the knowledge of a multi-codebook teacher model (GRFVQ) into a single-codebook student model (DistilCodec). The student achieves near-perfect codebook utilization and provides a simple, efficient compressed audio representation that does not require decoupling acoustic and semantic information.

A True End-to-End Architecture (UniTTS): Built upon DistilCodec's ability to model complete audio features, UniTTS possesses full end-to-end capabilities for both input and output. This allows the audio generated by UniTTS to exhibit far more natural and authentic emotional expressiveness.

A New Training Paradigm for Audio Language Models: We introduce a structured methodology:

Audio Perception Modeling: The training of DistilCodec, which focuses solely on feature discretization using universal audio data to enhance its robustness.

Audio Cognitive Modeling: The training of UniTTS, which is divided into three distinct phases: Pre-training, Supervised Fine-Tuning (SFT), and Alignment. This process leverages DistilCodec's complete audio feature modeling by incorporating a universal audio autoregressive task during pre-training. It also systematically evaluates the impact of different text-audio interleaved prompts during SFT and uses preference optimization, specifically Linear Preference Optimization (LPO), to further refine speech generation quality.

UniTTS & DistilCodec: The Technical Architecture

UniTTS System Architecture

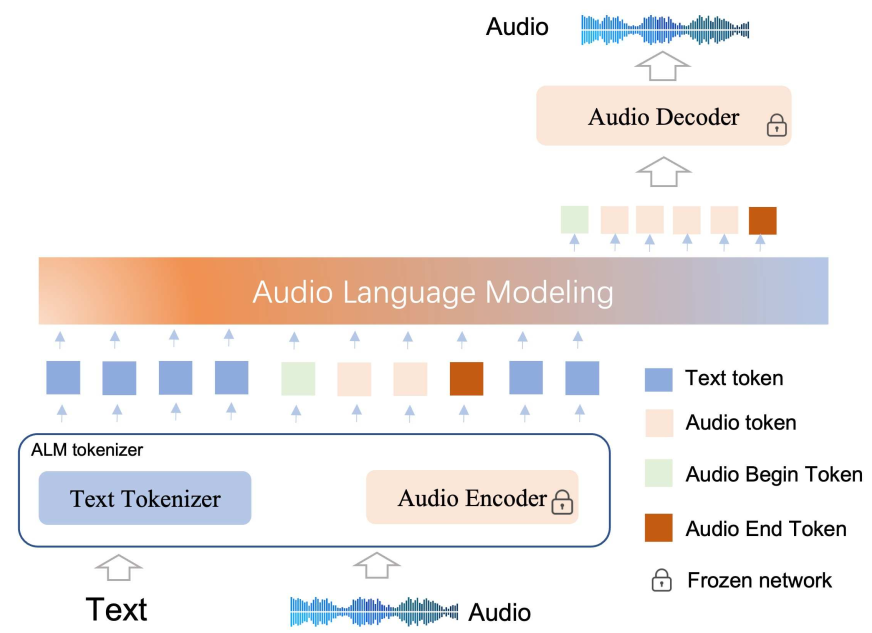

The UniTTS architecture is composed of two primary components: the ALM (Audio Language Model) Tokenizer and the Transformer-based Backbone.

ALM Tokenizer: This includes a standard Text Tokenizer for processing text and our innovative Audio Encoder (DistilCodec) for discretizing and reconstructing audio.

Backbone: This uses a decoder-only Transformer (Qwen2.5-7B) that performs alternating autoregression over the two token modalities (text and audio).

The model's vocabulary was expanded from its original size to 180,000 tokens to accommodate an additional 32,000 dedicated audio tokens generated by DistilCodec.
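To make the vocabulary expansion concrete, here is a minimal sketch of how codec indices could be mapped into a shared text-audio token space. It assumes the audio tokens are appended directly after the text vocabulary; the sizes are illustrative values derived from the article's rounded figures (about 148,000 text tokens plus 32,000 audio tokens), and all helper names are hypothetical, not the actual UniTTS tokenizer API.

```python
from typing import List, Optional

# Illustrative sizes derived from the article's rounded figures; the real
# tokenizer's exact counts and token ordering are assumptions.
TEXT_VOCAB_SIZE = 148_000       # hypothetical original text vocabulary size
AUDIO_CODEBOOK_SIZE = 32_000    # hypothetical number of DistilCodec codes
AUDIO_OFFSET = TEXT_VOCAB_SIZE  # audio IDs start right after the text IDs


def audio_code_to_token_id(code: int) -> int:
    """Shift a DistilCodec code index into the expanded LLM vocabulary."""
    if not 0 <= code < AUDIO_CODEBOOK_SIZE:
        raise ValueError(f"code {code} is outside the audio codebook")
    return AUDIO_OFFSET + code


def token_id_to_audio_code(token_id: int) -> Optional[int]:
    """Return the codec index for an audio token, or None for a text token."""
    code = token_id - AUDIO_OFFSET
    return code if 0 <= code < AUDIO_CODEBOOK_SIZE else None


def build_sequence(text_ids: List[int], audio_codes: List[int]) -> List[int]:
    """Concatenate text token IDs with shifted audio token IDs into one stream."""
    return list(text_ids) + [audio_code_to_token_id(c) for c in audio_codes]


# Example: a short text prompt followed by three audio frames.
print(build_sequence([101, 2023], [0, 17, 31_999]))
# [101, 2023, 148000, 148017, 179999]
```

In this sketch, keeping both modalities in one ID space is what lets a single decoder-only backbone predict text and audio tokens with the same output head.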

The DistilCodec Structure: Efficiency Through Distillation

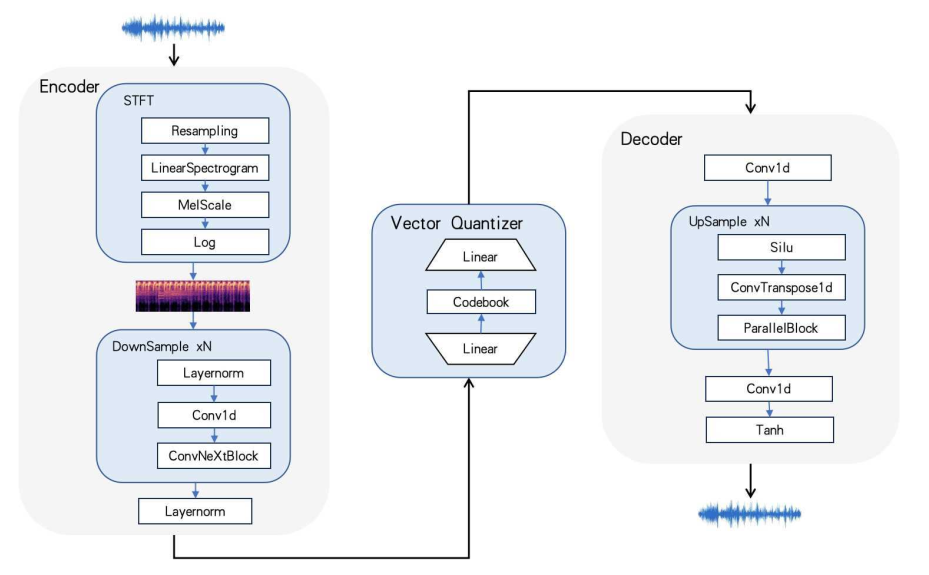

The DistilCodec Structure

DistilCodec's network, as shown above, first converts raw audio into a spectrogram using a short-time Fourier transform (STFT). The spectrogram then passes through a stack of residual convolutional layers for feature compression. A quantizer uses a linear layer to project these compressed features into the codebook's embedding space, and the index of the nearest codebook vector becomes the discrete representation of that audio segment. For reconstruction, a GAN-based decoder reverses this process to generate the corresponding audio waveform.
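The quantization step can be sketched with NumPy as a linear projection followed by a nearest-neighbor search over the codebook. This is a minimal illustration under assumed shapes; the function name, the dimensions, and the use of squared Euclidean distance are assumptions rather than DistilCodec's published implementation.

```python
import numpy as np


def quantize(features: np.ndarray, proj: np.ndarray, codebook: np.ndarray):
    """Map encoder frames to discrete codes (hypothetical helper).

    features: (T, D_in)  compressed encoder output, one row per frame
    proj:     (D_in, D)  linear projection into the codebook space
    codebook: (K, D)     K learned codebook vectors
    Returns (indices, quantized): one code index per frame and the
    corresponding codebook vectors, which a decoder would consume.
    """
    z = features @ proj  # project frames into the codebook space
    # Squared Euclidean distance from every frame to every codebook vector,
    # expanded as |z|^2 - 2 z.c + |c|^2 to avoid an explicit loop.
    dists = (
        np.sum(z**2, axis=1, keepdims=True)
        - 2.0 * z @ codebook.T
        + np.sum(codebook**2, axis=1)
    )
    indices = np.argmin(dists, axis=1)  # the discrete token for each frame
    quantized = codebook[indices]       # vectors looked up for reconstruction
    return indices, quantized


# Toy example: a 4-entry codebook in 4 dimensions and an identity projection.
codebook = np.eye(4)
proj = np.eye(4)
frames = np.array([[0.9, 0.1, 0.0, 0.0],
                   [0.0, 0.0, 0.2, 1.1]])
idx, q = quantize(frames, proj, codebook)
print(idx)  # [0 3]
```

The integer sequence `idx` is what the language model would see as audio tokens, while `q` is what the decoder would turn back into a waveform.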

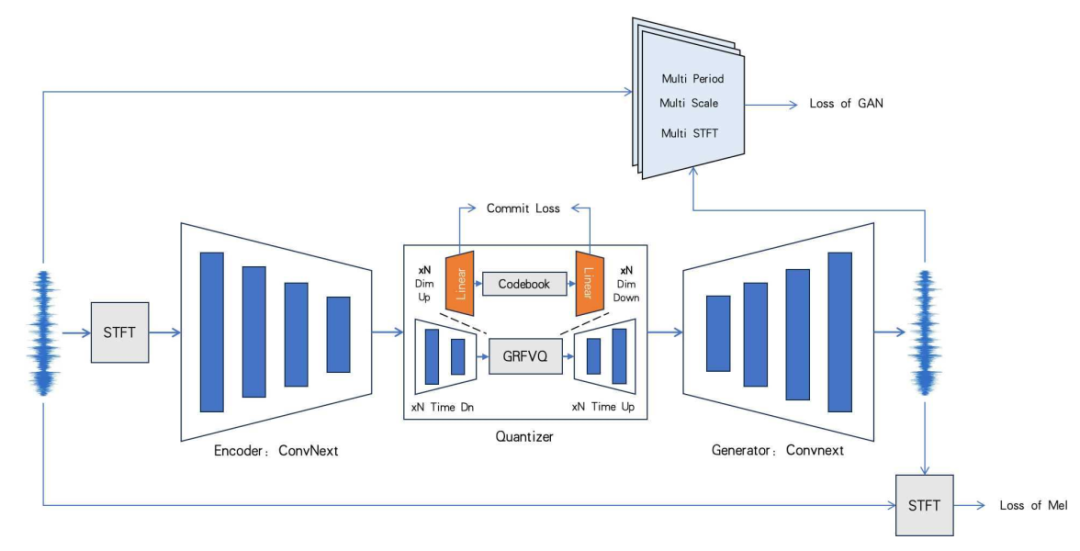

The training process for DistilCodec.

DistilCodec is trained in two steps. We first train a "Teacher Codec" that combines GVQ, RVQ, and FVQ quantization across 32 distinct codebooks. We then initialize a "Student Codec" (our DistilCodec) with the parameters of the Teacher's encoder and decoder. The Student Codec uses a single group and a single residual stage, making it a single-codebook model, but its codebook size equals the sum of all the teacher's codebook sizes, allowing it to capture broad acoustic diversity in a highly efficient structure.
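A back-of-the-envelope calculation shows why collapsing 32 codebooks into one shortens the token sequence the LLM must model. The sketch assumes, purely for illustration, that each teacher codebook has 1,024 entries and that both codecs run at 75 frames per second; the article states only that the teacher uses 32 codebooks and that the student's codebook size is their sum.

```python
import math


def bits_per_frame(codebook_sizes):
    """Bits per frame when each codebook emits one index per frame."""
    return sum(math.log2(k) for k in codebook_sizes)


def rates(codebook_sizes, frame_rate_hz):
    """Tokens/s the LLM must model, and bits/s of the discrete stream."""
    return {
        "tokens_per_second": len(codebook_sizes) * frame_rate_hz,
        "bits_per_second": bits_per_frame(codebook_sizes) * frame_rate_hz,
    }


FRAME_RATE_HZ = 75                    # assumed frame rate, illustration only
teacher_books = [1024] * 32           # assumed: 32 codebooks x 1,024 entries
student_books = [sum(teacher_books)]  # one codebook, size = sum of teacher's

teacher = rates(teacher_books, FRAME_RATE_HZ)
student = rates(student_books, FRAME_RATE_HZ)
print(student_books[0], teacher, student)
```

Under these assumptions the teacher emits 2,400 tokens per second versus 75 for the student, a 32-fold reduction in sequence length, and the student's single 32,768-entry codebook needs only 15 bits per frame.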

The Three-Stage Training Paradigm of UniTTS

Modeling audio presents a much larger representation space than text alone. Therefore, access to large-scale, high-quality text-audio paired data is a prerequisite for achieving general-purpose audio autoregression.

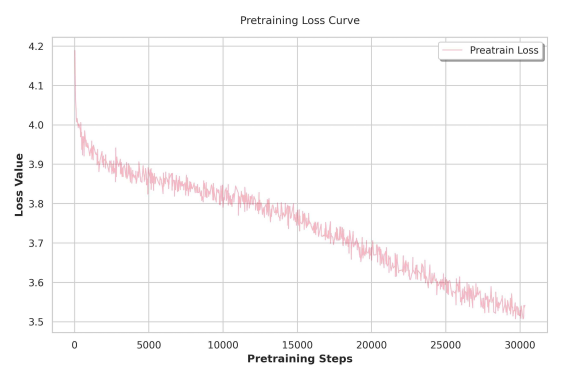

Stage 1: Pre-training

UniTTS employs a multi-stage pre-training strategy.

Phase One: We start with a pre-trained text-based LLM and introduce text data, universal audio data, and a limited amount of text-audio paired data. This phase teaches the model the fundamentals of audio modeling. A key challenge here is "modality competition," where introducing audio data can cause the model's original text generation capabilities to degrade.

Phase Two: To counteract this, we combine text-based instruction datasets with our existing universal audio and text-audio datasets. This reinforces and enhances the model's text-generation abilities while solidifying its audio skills.

Context Expansion: To accommodate the long-sequence nature of audio data, we expanded the model's context window from 8,192 to 16,384 tokens.

Pre-training loss curve
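Doubling the context window doubles the amount of audio a single training sequence can hold. The helper below makes that arithmetic explicit; the 75 tokens-per-second audio rate and the 192-token text reservation are hypothetical values, not figures from the article.

```python
def audio_seconds_in_context(context_tokens: int,
                             audio_tokens_per_second: float,
                             reserved_text_tokens: int = 0) -> float:
    """Seconds of audio that fit in a context window (hypothetical helper).

    reserved_text_tokens covers the transcript or instruction that precedes
    the audio in an interleaved text-audio sample.
    """
    usable = context_tokens - reserved_text_tokens
    if usable <= 0 or audio_tokens_per_second <= 0:
        raise ValueError("no room for audio tokens")
    return usable / audio_tokens_per_second


for window in (8_192, 16_384):
    secs = audio_seconds_in_context(window, 75, reserved_text_tokens=192)
    print(f"{window:>6} tokens -> {secs:.1f} s of audio")
```

With these assumed rates, the usable audio per sample grows from about 107 s to about 216 s.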

Stage 2: Supervised Fine-Tuning (SFT)

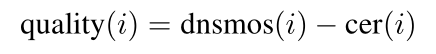

The quality of data during SFT significantly impacts the final model's capabilities. Existing open-source text-audio datasets have notable flaws, including noisy ASR-generated labels and long, unnatural silences from sources like audiobooks. To overcome this, we designed a practical composite quality scoring method to filter and rank training samples:

Here, dnsmos(i) filters for acoustic quality, while cer(i), the character error rate measured against a re-annotated transcript, filters out samples with inaccurate labels. By re-ranking samples and applying a threshold to this quality score, we substantially improved the quality of our training data.
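As an illustration of the general recipe, and not the exact UniTTS formula, the sketch below computes cer(i) as the Levenshtein distance between a sample's original label and its ASR re-annotation, divided by the label length, and then combines it with a precomputed DNSMOS score. The `quality_score` combination, the sample dictionary fields, and the threshold are hypothetical choices.

```python
def levenshtein(a: str, b: str) -> int:
    """Character-level edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute
        prev = curr
    return prev[-1]


def cer(label: str, reannotated: str) -> float:
    """Character error rate of a re-annotation against the original label."""
    if not label:
        raise ValueError("label must be non-empty")
    return levenshtein(label, reannotated) / len(label)


def quality_score(dnsmos: float, cer_value: float) -> float:
    """HYPOTHETICAL combination: scale acoustic quality by label accuracy."""
    return dnsmos * max(0.0, 1.0 - cer_value)


def filter_samples(samples, threshold):
    """Rank samples by the hypothetical score; keep those above threshold."""
    scored = [(quality_score(s["dnsmos"], cer(s["label"], s["asr"])), s)
              for s in samples]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for score, s in scored if score >= threshold]
```

Any monotone combination that rewards high DNSMOS and penalizes high CER would serve the same ranking-then-threshold purpose; the multiplicative form here is just one simple choice.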

Stage 3: Preference Alignment

While SFT helps the model learn specific speech patterns, it can sometimes lead to issues like unnatural prosodic prolongation or repetition—an auditory equivalent of the "parroting" seen in text-only LLMs. To refine this, we adopted preference optimization. However, standard Direct Preference Optimization (DPO) can be unstable for long-sequence audio modeling and may lead to mode collapse.

Preference Alignment

Therefore, UniTTS introduces Linear Preference Optimization (LPO) as a more stable alternative. In the LPO loss function, where x1 and x2 denote the positive and negative samples, the objective refines the policy gradient by gently increasing the likelihood of the positive sample while using straight-through estimation to restrain the gradients of both samples. This stabilizes preference optimization on long audio sequences, leading to more robust and natural outputs.
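For reference, the standard DPO objective that LPO is positioned against fits in a few lines of Python. It operates on sequence-level log-probabilities, i.e. sums of per-token log-probabilities under the trained policy and a frozen reference model, so their magnitude grows with sequence length. This sketch shows DPO only; it does not attempt to reproduce the LPO loss, and the function names are illustrative.

```python
import math
from typing import Sequence


def sequence_logp(token_logps: Sequence[float]) -> float:
    """Sequence log-probability: sum of per-token log-probabilities."""
    return float(sum(token_logps))


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(pi_chosen: float, pi_rejected: float,
             ref_chosen: float, ref_rejected: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair (positive x1, negative x2).

    Each argument is a sequence log-probability under the policy (pi_*)
    or the frozen reference model (ref_*).
    """
    margin = (pi_chosen - ref_chosen) - (pi_rejected - ref_rejected)
    return -log_sigmoid(beta * margin)
```

The loss equals log 2 when the policy matches the reference and falls as the policy prefers the positive sample more than the reference does.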

Experimental Results: A New State-of-the-Art

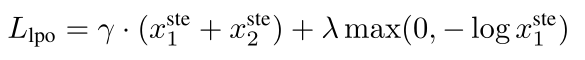

We evaluated DistilCodec's perplexity (PPL) and codebook utilization (Usage) on the LibriSpeech-Clean dataset and our self-built Universal Audio dataset. The results confirm that DistilCodec achieves nearly 100% codebook utilization on both speech and general audio datasets.

Comparison of codebook rate, usage rate, and perplexity
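Codebook usage and perplexity are typically computed from the histogram of code indices a codec emits over an evaluation set: usage is the fraction of codebook entries that appear at least once, and perplexity is the exponential of the entropy of the code distribution, which reaches the codebook size when every code is equally likely. The sketch below assumes that is how Usage and PPL are defined here; the function name is hypothetical.

```python
import numpy as np


def codebook_stats(indices, codebook_size: int) -> dict:
    """Usage and perplexity of the codes emitted over an evaluation set."""
    idx = np.asarray(indices).ravel()
    if idx.size == 0 or idx.min() < 0 or idx.max() >= codebook_size:
        raise ValueError("indices must be non-empty and within the codebook")
    counts = np.bincount(idx, minlength=codebook_size)
    probs = counts / counts.sum()
    nonzero = probs[probs > 0]                    # 0 * log 0 is treated as 0
    entropy = -np.sum(nonzero * np.log(nonzero))  # in nats
    return {
        "usage": np.count_nonzero(counts) / codebook_size,
        "perplexity": float(np.exp(entropy)),
    }


print(codebook_stats([0, 1, 2, 3, 4, 5, 6, 7], 8))  # uniform: full usage
print(codebook_stats([0, 0, 0, 1], 8))              # mostly collapsed codebook
```

A perfectly uniform code distribution gives usage 1.0 and a perplexity equal to the codebook size, which is the sense in which near-100% utilization is near-perfect.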

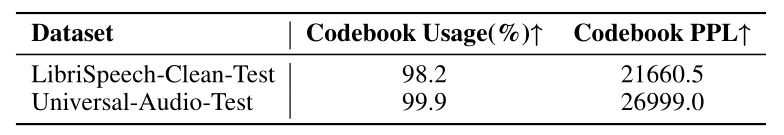

Furthermore, a comprehensive analysis on the LibriSpeech-Clean-Test benchmark demonstrates DistilCodec's superior speech reconstruction capabilities. At an efficient bitrate of around 1 kbps, DistilCodec achieves state-of-the-art (SOTA) performance on STOI (Short-Time Objective Intelligibility), indicating excellent speech intelligibility.

Comprehensive comparison of different Codec models

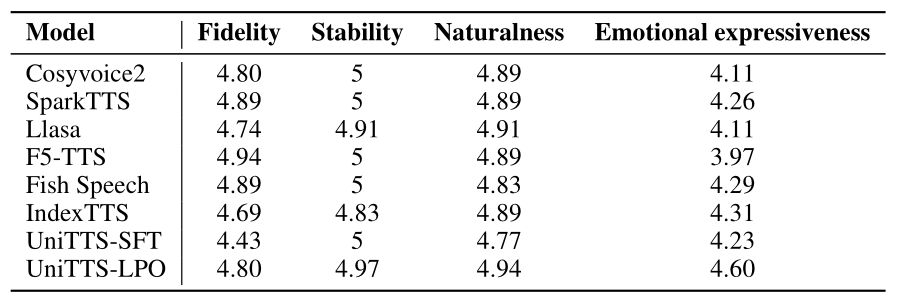

To rigorously evaluate the complete system, we compared UniTTS against leading existing methods, including CosyVoice2, Spark-TTS, LLaSA, F5-TTS, and Fish-Speech. The results show that UniTTS-LPO, the final aligned model, improves emotional expressiveness, fidelity, and naturalness over both the SFT-only version and all competing models. This validates the effectiveness of our distillation-driven codec, holistic feature modeling, and LPO training methodology.

The Emdoor Advantage: From Research Lab to Rugged Reality

This research isn't just an academic exercise. For a company like Emdoor, a leader in rugged computing solutions, the development of UniTTS is a strategic move to redefine on-device human-computer interaction in the world's most demanding environments.

The efficiency of DistilCodec and the power of UniTTS are perfectly suited for the edge computing scenarios where Emdoor devices excel. Consider the real-world applications:

Field Service & Manufacturing: A technician in a noisy factory can issue complex, natural language commands to their rugged tablet, receiving clear, calm, and contextually appropriate synthesized audio feedback, even over the sound of heavy machinery.

First Responders & Public Safety: Paramedics can interact with their devices hands-free, receiving critical patient data read aloud with a tone that conveys urgency without causing panic. Police officers can operate in-vehicle systems with fluid voice commands, keeping their hands and eyes on the situation.

Logistics & Warehousing: Workers operating forklifts or managing inventory can communicate with the warehouse management system via voice, improving efficiency and safety without needing to stop and use a keypad.

The on-device nature of UniTTS means these interactions can happen instantly, without reliance on a stable cloud connection—a critical requirement for mobile and field operations. By integrating this technology into their rugged laptops, tablets, and handhelds, Emdoor is poised to deliver a user experience that is not only more efficient but also fundamentally more human.

Conclusion: The Future of Voice is Here

Through its highly efficient discrete encoding technology, DistilCodec has achieved near-perfect utilization of a single codebook, laying a robust foundation for versatile and adaptive audio LLMs. Building on this, the UniTTS model, with its stable three-stage cross-modal training strategy, represents a significant leap forward.

In the context of human-computer interaction, UniTTS does more than just improve the naturalness and fluency of voice exchange. It brings a new dimension of emotion and personality to the user experience, transforming devices from simple tools into intuitive, responsive partners. This collaboration between Emdoor Research Institute and IDEA Research Institute is not merely an innovation in AI; it is the blueprint for the future of on-device interaction.